Ultra-Fast Sentiment Analysis with Tiny Embeddings

At Narnium, we tackle hard problems with the clever use of small, efficient, domain-specific AI models. A question we recently encountered involved classifying natural language product reviews into positive and negative categories.

We were curious to see how some publicly available, pre-trained small language models would fare on this task. So we set out to perform a fair, general, systematic, multi-dimensional evaluation of the most popular open-weight models, and compare them with respect to accuracy, training and inference speed, and on-disk size. We wanted to share with you our most important discoveries, as well as some actionable insights you can readily apply to your next machine learning project.

TL;DR

We achieved a balanced accuracy of 93.5% on the Amazon Reviews dataset using the best neural model. Meanwhile, our best static model, which can process text at 1.3 MB/s, still achieved a balanced accuracy of 86%.

The best part is, you can do this too! We recommend using one of the following setups:

- For maximal accuracy, use Nomic Embed Text v1.5 for embeddings, and L2-regularized (ridge) logistic regression as the downstream classifier, optimizing the regularization coefficient with cross-validation. This combination achieves a balanced accuracy of 0.935 and a Matthews correlation coefficient (MCC) of 0.867, while being able to process approximately 2100 bytes per second. (We primarily use the MCC to evaluate predictive power, as it is the most representative and balanced of the commonly used metrics.)

- For a slightly less accurate, but around 3x faster alternative, use Multilingual-E5-Small for the embeddings instead, achieving an MCC of 0.844 and a throughput of 5900 B/s.

- For a blazing fast but somewhat less accurate pipeline, distill Nomic Embed Text v1.5 using the Model2Vec method, or use Potion Retrieval 32M, then train a linear discriminant analysis (LDA) classifier with the shrinkage coefficient selected automatically by scikit-learn. (For the curious, this is the Ledoit-Wolf estimate, named after Ledoit and Wolf's famous paper.) This approach achieves an MCC of 0.736 and a balanced accuracy of 0.86, while generating embeddings at 1.3 MB/s and 1.75 MB/s, respectively, or roughly 600 to 800 times faster than the most accurate neural model.

These are quite good results, especially in light of the simplicity of the approach and the small size of the language models involved. Read on for the full story!

The Approach

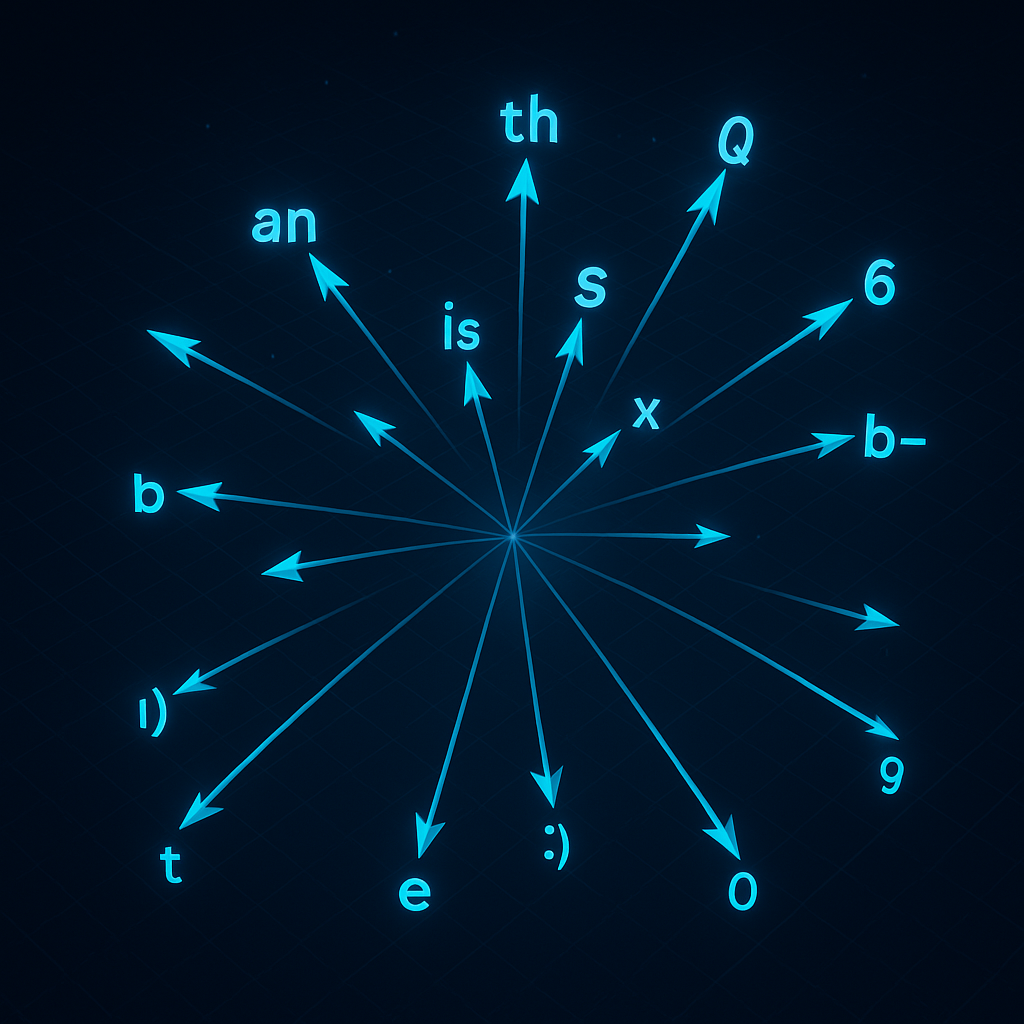

We used a simple two-step transfer learning method:

- Generate vector embeddings for the reviews, using a sentence embedding model.

- Train a simple downstream classifier on the resulting embeddings.

We performed 10-fold cross-validation over the data: the reviews are split into 10 disjoint, random subsets, and in each iteration ("fold"), a different subset is held out for testing while the remaining nine are used for training. This allowed us to accurately assess the predictive power of each individual model, while still using all of the data for both training and testing once the cross-validation process is complete.

All metrics reported in this analysis were computed by averaging the test scores over the 10 folds. The cross-validation was constrained (grouped), so that reviews by any specific user never appeared in both the train and the test set of the same fold. This ensured that the models didn't just learn individual users' style.
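A minimal sketch of such a grouped split, in plain Python, might look like the following. The function name `grouped_kfold` and the toy user IDs are made up for illustration, and dealing users round-robin into folds is an assumption rather than a description of the exact splitter used in the experiments. scikit-learn's `GroupKFold` offers the same guarantee out of the box.

```python
import random
from collections import defaultdict


def grouped_kfold(groups, n_splits=10, seed=0):
    """Yield (train_idx, test_idx) pairs in which no group spans both sides."""
    # Collect the sample indices that belong to each group (here: each user).
    by_group = defaultdict(list)
    for idx, group in enumerate(groups):
        by_group[group].append(idx)

    # Shuffle the groups reproducibly, then deal them round-robin into folds.
    keys = sorted(by_group)
    random.Random(seed).shuffle(keys)
    folds = [[] for _ in range(n_splits)]
    for i, key in enumerate(keys):
        folds[i % n_splits].extend(by_group[key])

    # Each fold serves as the test set exactly once.
    for k in range(n_splits):
        test_idx = sorted(folds[k])
        train_idx = sorted(
            i for j, fold in enumerate(folds) if j != k for i in fold
        )
        yield train_idx, test_idx


# Toy example: 8 reviews written by 6 hypothetical users.
user_ids = ["u1", "u1", "u2", "u3", "u3", "u4", "u5", "u6"]
splits = list(grouped_kfold(user_ids, n_splits=3))
```

Because whole users are assigned to folds, both reviews by `u1` always end up on the same side of every split.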

The figure at the top of this section summarizes the setup.

Throughput and training/inference performance were measured on a MacBook Pro with an M3 Max CPU (10+4 cores) and 36 GB of RAM.

The Models

First, let's talk embeddings. We used some of the most popular, small, publicly available text embeddings from Hugging Face Hub:

- Some of them use a sophisticated neural network architecture (transformer-based or encoder-only), such as Nomic Embed Text v1.5 or Paraphrase MPNet V2.

- Many of them, however, are very fast and simple static models, such as the MRL multilingual static embeddings, or various Model2Vec distillations of full, context-aware, neural models, e.g., Minishlab's Potion Retrieval 32M.

- We also used FastText's 300-dimensional static embeddings as a baseline, as FastText is a relatively old library using a variant of Word2Vec, a classic NLP technique.

- Finally, we also evaluated Apple's Natural Language framework, specifically, the NLContextualEmbedding class, to see how a proprietary, black-box model compares to the open-weight alternatives. This model produces individual embeddings at the token level, which we aggregated using mean pooling, since that is what most of the other models do.
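For readers unfamiliar with mean pooling, here is a minimal sketch in plain Python. The helper name `mean_pool` and the tiny two-dimensional vectors are illustrative assumptions; real token embeddings have hundreds of dimensions, and this is not the code that talks to Apple's framework.

```python
def mean_pool(token_vectors):
    """Average a list of equal-length token vectors into a single vector."""
    if not token_vectors:
        raise ValueError("need at least one token vector")
    n_tokens = len(token_vectors)
    dim = len(token_vectors[0])
    # Average each dimension independently over all tokens.
    return [sum(vec[d] for vec in token_vectors) / n_tokens for d in range(dim)]


# Three hypothetical token embeddings for one review.
tokens = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
pooled = mean_pool(tokens)  # one fixed-size vector per review
```

The result has the same dimensionality as a single token embedding, regardless of how many tokens the review contains, which is what the downstream classifier needs.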

FastText and the Apple NL framework do not require a separate runtime. For the Model2Vec static models, we used our own, improved implementation, while for the neural models, we used the Fastembed runtime, both written in Rust.

The full list of text embedding models, along with a short explanation of each, can be found in this table.

As for the classification models, we used the simplest, traditional classifiers from sklearn, initialized with the following sets of parameters:

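```python
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis, QuadraticDiscriminantAnalysis
from sklearn.ensemble import HistGradientBoostingClassifier, RandomForestClassifier
from sklearn.linear_model import LogisticRegressionCV

models = [
    # LDA with automatic (Ledoit-Wolf) shrinkage of the covariance estimate
    LinearDiscriminantAnalysis(solver='lsqr', shrinkage='auto'),
    # QDA with a small, constant regularization coefficient
    QuadraticDiscriminantAnalysis(reg_param=0.001),
    # Logistic regression; regularization strength chosen by cross-validation
    LogisticRegressionCV(max_iter=1024, n_jobs=-1, random_state=133742),
    RandomForestClassifier(n_jobs=-1, random_state=133742),
    HistGradientBoostingClassifier(random_state=133742),
]
```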

The Data

We used the excellent Amazon Reviews 2023 Dataset in our experiments. This is a large, cleaned, labeled, open-access dataset. Depending on the product category, it contains anywhere from tens of thousands to millions of reviews (title, text body, and an integer rating of 1 to 5 stars) of products sold on Amazon.

To simplify our task, we grabbed the Subscription Boxes category, restricted to unambiguously positive (4 or 5 stars) and negative (1 or 2 stars) reviews. Neutral reviews with 3 stars were discarded. This left us with a grand total of 16216 reviews, about 75% of which were positive.
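The labeling rule can be sketched in a few lines of plain Python. The field names (`title`, `text`, `rating`), the helper `star_to_label`, and the sample reviews are invented for illustration and do not come from the actual data frame.

```python
def star_to_label(stars):
    """Map a 1-5 star rating to 1 (positive), 0 (negative), or None (neutral)."""
    if stars >= 4:
        return 1
    if stars <= 2:
        return 0
    return None  # 3-star reviews are treated as neutral and dropped


# Hypothetical sample records, not taken from the dataset.
reviews = [
    {"title": "Love it", "text": "Great box every month.", "rating": 5},
    {"title": "Meh", "text": "Some items were fine.", "rating": 3},
    {"title": "Never again", "text": "Arrived late and damaged.", "rating": 1},
    {"title": "Pretty good", "text": "Nice selection.", "rating": 4},
]

# Keep only unambiguous reviews and attach the binary target.
labeled = []
for review in reviews:
    label = star_to_label(review["rating"])
    if label is not None:
        labeled.append({**review, "label": label})

positive_share = sum(r["label"] for r in labeled) / len(labeled)
```

Checking `positive_share` early is worthwhile: with roughly 75% positive reviews, plain accuracy would flatter a classifier that always predicts "positive", which is one reason to prefer class-balance-aware metrics.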

As an example, a subset of the final, cleaned data frame is available here.

Raw Results

First of all, let's have a quick glance at the best results. The best value(s) of each column is/are in bold:

| embedding | estimator | Bal. Acc. | MCC | fit_time (s) | score_time (s) | embedding_throughput (B/s) |
|---|---|---|---|---|---|---|
| fastembedMultilingualE5Small | lda | 0.918 | 0.833 | **0.165** | **0.004** | 5861 |
| fastembedMultilingualE5Small | logistic_regression | 0.921 | 0.844 | 2.685 | **0.004** | 5861 |
| fastembedNomicEmbedTextV15Q | lda | 0.933 | 0.865 | 0.506 | **0.004** | 2106 |
| fastembedNomicEmbedTextV15Q | logistic_regression | **0.935** | **0.867** | 6.261 | 0.008 | 2106 |
| m2vNomicEmbedTextV15 | lda | 0.859 | 0.737 | 0.550 | **0.004** | 1319162 |
| m2vNomicEmbedTextV15 | logistic_regression | 0.870 | 0.750 | 8.119 | 0.008 | 1319162 |
| m2vPotionRetrieval32M | lda | 0.860 | 0.736 | 0.369 | 0.005 | **1748334** |
| m2vPotionRetrieval32M | logistic_regression | 0.865 | 0.743 | 4.851 | 0.008 | **1748334** |

The legend for the performance metrics is as follows:

- fit_time: the time it took to train the classifier, in seconds (excludes embedding time)

- score_time: the time it took to perform inference, in seconds (excludes embedding time)

- MCC: Matthews correlation coefficient, as discussed above

- Bal. Acc.: balanced accuracy, the average of sensitivity (true positive rate) and specificity (true negative rate)

- F1: F1 score, the harmonic mean of precision and recall. (Only in the full tables, see below.)

- Tjur R^2: Tjur's pseudo-R2, a not very common, but useful and intuitive metric: the difference between the mean predicted probabilities of the positive and negative classes in the hold-out test set. (Only in the full tables, see below.)
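To make the accuracy metrics concrete, here is a small, dependency-free sketch that computes them for a toy binary problem. The function names, labels, and probabilities are invented for illustration; in practice, scikit-learn's `balanced_accuracy_score`, `matthews_corrcoef`, and `f1_score` do the same job.

```python
import math


def confusion(y_true, y_pred):
    """Return (tp, tn, fp, fn) for binary labels in {0, 1}."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, tn, fp, fn


def balanced_accuracy(tp, tn, fp, fn):
    # Mean of sensitivity (TPR) and specificity (TNR).
    return 0.5 * (tp / (tp + fn) + tn / (tn + fp))


def mcc(tp, tn, fp, fn):
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom if denom else 0.0


def f1(tp, tn, fp, fn):
    # Harmonic mean of precision and recall; tn is unused by definition.
    return 2 * tp / (2 * tp + fp + fn)


def tjur_r2(y_true, proba):
    # Mean predicted probability of positives minus that of negatives.
    pos = [p for t, p in zip(y_true, proba) if t == 1]
    neg = [p for t, p in zip(y_true, proba) if t == 0]
    return sum(pos) / len(pos) - sum(neg) / len(neg)


# Toy data: true labels and predicted probabilities of the positive class.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
proba = [0.9, 0.8, 0.4, 0.2, 0.6, 0.1, 0.7, 0.3]
y_pred = [1 if p >= 0.5 else 0 for p in proba]

counts = confusion(y_true, y_pred)        # (3, 3, 1, 1)
bal_acc = balanced_accuracy(*counts)      # 0.75
mcc_value = mcc(*counts)                  # 0.5
f1_value = f1(*counts)                    # 0.75
tjur = tjur_r2(y_true, proba)             # approximately 0.4
```

Note that Tjur's pseudo-R2 is the only one of the four that uses the predicted probabilities rather than the thresholded labels.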

If you want to dive deep into the numbers, the complete set of timing and accuracy metrics can be found in these tables. In these tables, all sheets except the last one ("All Metrics") are sorted from best to worst. (The last sheet, containing all metrics, is sorted alphabetically by embedding and classifier.)

Some of the sheets are named according to the scheme <metric> by embedding, where <metric> is the name of an individual performance (accuracy or speed) metric. The tables present the best (i.e., minimum or maximum, as appropriate for the given metric) values aggregated along the axis of classifiers, so that for each embedding model, the best score it achieved with any classifier is displayed.

In contrast, every other sheet is named using the pattern <metric> by estimator. These tables aggregate and compute the maximum over embeddings, so you can use them to assess the performance of each individual downstream classifier model, paired with the embedding it performed best on.

The sheet named All Metrics contains all computed performance scores, for all combinations of embedding and classifier models, without any max- or min-aggregation.
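The aggregation behind the "by embedding" and "by estimator" sheets can be sketched as follows. The rows are copied from the summary table above (MCC and fit_time columns only), while the helper `best_by` is a hypothetical name; this illustrates the idea and is not the script that produced the spreadsheets.

```python
# (embedding, estimator, MCC, fit_time in seconds), from the table above.
rows = [
    ("fastembedMultilingualE5Small", "lda", 0.833, 0.165),
    ("fastembedMultilingualE5Small", "logistic_regression", 0.844, 2.685),
    ("fastembedNomicEmbedTextV15Q", "lda", 0.865, 0.506),
    ("fastembedNomicEmbedTextV15Q", "logistic_regression", 0.867, 6.261),
]


def best_by(rows, key_index, value_index, pick):
    """Keep the best value per key; pick is max for scores, min for times."""
    best = {}
    for row in rows:
        key, value = row[key_index], row[value_index]
        best[key] = value if key not in best else pick(best[key], value)
    return best


# "MCC by embedding": best MCC each embedding reached with any classifier.
mcc_by_embedding = best_by(rows, 0, 2, max)

# "fit_time by estimator": shortest training time of each classifier.
fit_time_by_estimator = best_by(rows, 1, 3, min)
```

Higher is better for accuracy metrics, so they use `max`, whereas timings use `min`.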

The throughput of the embedding models can be found in this table. Both the median and the mean throughput are presented in units of B/s (bytes per second). The median values are likely a better representation of embedding speed, because the averages may include model loading and warm-up time.
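A small sketch shows why the median is more robust here. The measurements below are invented, with a deliberately slow first call standing in for model loading, and `throughput_stats` is a hypothetical helper. Averaging per-call rates is one possible definition of "mean throughput" and may differ from how the numbers in the table were computed.

```python
import statistics


def throughput_stats(samples):
    """samples: list of (n_bytes, seconds) pairs, one per embedding call.

    Returns (median, mean) of the per-call throughput in bytes per second.
    """
    rates = [n_bytes / seconds for n_bytes, seconds in samples]
    return statistics.median(rates), statistics.fmean(rates)


# Hypothetical timings: the first call is slow because it includes warm-up.
samples = [(1000, 2.0), (1000, 0.5), (1000, 0.5), (1000, 0.4), (1000, 0.5)]
median_bps, mean_bps = throughput_stats(samples)
```

In this toy example the single slow call drags the mean down to 1800 B/s, while the median stays at the steady-state rate of 2000 B/s.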

Main Take-aways

The Nomic Embed Text v1.5 embedding model is the most accurate contestant, both in its original (neural) form, and among the significantly faster, distilled, static Model2Vec models as well. Unfortunately, in the non-distilled neural form, it's the slowest model; Multilingual-E5-small is almost 3x faster, in exchange for a slight loss of accuracy, around 2.5 percentage points. Curiously, the Model2Vec distillation of Nomic Embed Text v1.5 is also among the smallest models, at a mere 91 MB. The only smaller model is minishlab/M2V_base_output, with its tiny, 30 MB file.

Among the static models, Potion Retrieval 32M and static-similarity-mrl-multilingual-v1 are decent alternatives, too. They are worth trying for other tasks (such as clustering and semantic similarity search). Do note, however, that static models are still somewhat less accurate than transformers.

The Apple Natural Language framework didn't perform particularly well on either front. Both its accuracy and its embedding throughput lag behind the competition: in terms of accuracy (MCC, F1 score, balanced accuracy, pseudo-R2), it can't beat the top 1 to 3 static models (depending on the metric), while being significantly slower.

As for the downstream classifier, there seems to be no reason to use anything beyond simple, linear models. Logistic regression wins in terms of accuracy most of the time, closely followed by LDA, while LDA is a lot (~20x) faster to train, thanks to the lack of hyperparameter tuning.

Gradient boosting usually exhibits similar or slightly lower accuracy; however, it is an order of magnitude slower to train and evaluate. Meanwhile, QDA somewhat overfits in several cases, and random forest catastrophically overfits most of the time, and it is the slowest to train.

In the above experiments, QDA was initialized with a constant regularization coefficient of 0.001, which is on the same order of magnitude as the typical Ledoit-Wolf coefficient, when computed on all embeddings of the whole dataset. Apart from this eyeballed constant value, we also tried more sophisticated approaches (e.g., average of class-wise Ledoit-Wolf shrinkage coefficients weighted by class priors). Interestingly, these more complicated approaches didn't meaningfully affect the results: sometimes, they slightly increased performance, while in some other cases, they slightly decreased the overall predictive power, so in the end, we decided to keep the constant shrinkage.
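For reference, the prior-weighted average mentioned above boils down to a one-line formula. In the sketch below, the per-class shrinkage values are invented purely for illustration (in practice they would come from a Ledoit-Wolf estimator fitted on each class), the class counts are an assumed split that merely approximates the 75/25 ratio, and `prior_weighted_shrinkage` is a hypothetical helper name.

```python
def prior_weighted_shrinkage(class_counts, class_shrinkages):
    """Average per-class shrinkage coefficients, weighted by class priors."""
    total = sum(class_counts.values())
    return sum(
        class_counts[c] / total * class_shrinkages[c] for c in class_counts
    )


# Assumed class sizes: roughly 75% positive out of 16216 reviews.
class_counts = {"positive": 12162, "negative": 4054}

# Made-up per-class shrinkage coefficients, for illustration only.
class_shrinkages = {"positive": 0.0008, "negative": 0.0016}

reg_param = prior_weighted_shrinkage(class_counts, class_shrinkages)
```

With these illustrative inputs, the weighted average comes out at about 0.001, i.e., the same order of magnitude as the constant we ended up using.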

Caveats and Limitations

We are aware of at least the following notable potential issues with our study:

- Temporal contamination: since language evolves over time, it would be cleanest to sort reviews by timestamp, and then only train on earlier samples and predict on later samples. This, however, is non-trivial; "how to split time series" is a whole other dimension to this problem. Consider, for example, what happens when the same user wrote reviews both before and after the timestamp defining the train-test split.

  We checked whether it's possible to predict just the time using a simple, binary target, where the first (oldest) half of the data were labeled 0, and the second (newest) half were labeled 1. Indeed, there is some signal, as seen in this table. We also checked manually, on a single 90/10% train-test split, that training on earlier samples and predicting on later samples does have a moderate negative impact on the predictive power: the models lose around 2 to 5 percentage points of accuracy, depending on the particular performance metric. Although this impact is not huge, it does suggest that in a real-world setting, periodic re-training of the prediction models would be necessary, so as to keep up with the continuously changing nature of language.

- Dataset Size and Diversity: In the interest of time, we trained and evaluated the models on the smallest dataset, i.e., the "Subscription Boxes" collection. Results here may not generalize to other datasets, so you are encouraged to experiment with your own data, especially when it's domain-specific or more diverse than reviews involving a single, narrow category of products.
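The two temporal checks described under "Temporal contamination" can be sketched in plain Python as follows. The timestamps are arbitrary integers, and the helper names `time_half_labels` and `temporal_split` are hypothetical; this is an illustration of the idea, not the exact procedure we ran.

```python
def time_half_labels(timestamps):
    """Label the oldest half of the samples 0 and the newest half 1."""
    order = sorted(range(len(timestamps)), key=lambda i: timestamps[i])
    labels = [0] * len(timestamps)
    for rank, idx in enumerate(order):
        if rank >= len(timestamps) // 2:
            labels[idx] = 1
    return labels


def temporal_split(timestamps, train_fraction=0.9):
    """Train on the earliest samples and test on the latest ones."""
    order = sorted(range(len(timestamps)), key=lambda i: timestamps[i])
    cut = int(len(order) * train_fraction)
    return order[:cut], order[cut:]


# Hypothetical review timestamps (e.g., days since some reference date).
timestamps = [50, 10, 40, 20, 30, 70, 60, 90, 80, 100]

# Binary "old vs. new" target for the time-predictability check.
labels = time_half_labels(timestamps)

# Single 90/10 split in which every test review is newer than any train review.
train_idx, test_idx = temporal_split(timestamps)
```

Note that this simple split ignores the per-user grouping constraint, which is exactly the complication raised in that bullet: a user with reviews on both sides of the cut would need special handling.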